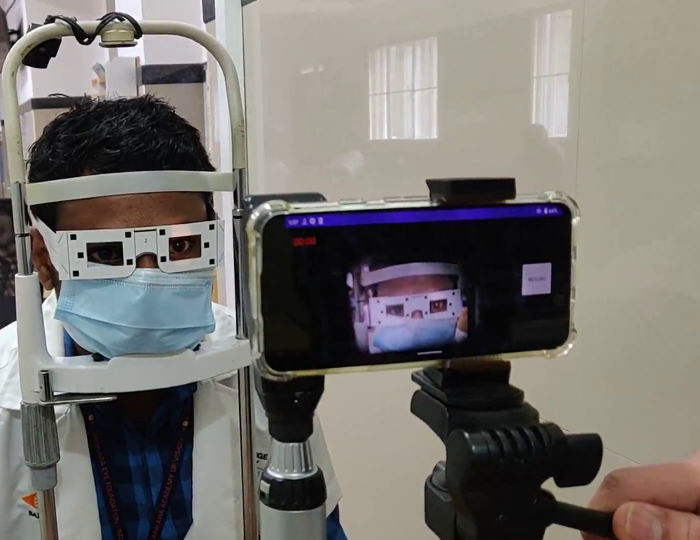

Aditya Aggarwal

I have been a master's student in the CSE department at UC San Diego since Fall 2022. I am currently working at Google in Privacy, Safety and Security as a SWE Intern, focusing on reducing the OpEx of manual review systems. I am also working as a Graduate Researcher in the Cognitive Robotics Lab on the Home Robot project under the mentorship of Prof. Henrik Christensen.

Previously, I was a Research Intern at Microsoft Research, India, in the Technology and Empowerment group, where I worked closely with Dr. Nipun Kwatra and Dr. Mohit Jain at the intersection of HCI, Computer Vision and Healthcare. My primary focus was on developing a low-cost, smartphone-based diagnostic solution for detecting eye diseases. I have also contributed to multiple open-source projects for the organization Robocomp.

I graduated from IIIT Hyderabad in 2020 with a B.Tech (Honors), where I was advised by Prof. K Madhava Krishna and Prof. Ravi Kiran Sarvadevabhatla. I worked mainly on vision-guided robot navigation and human activity understanding.

I am currently looking for full-time software engineering / machine learning opportunities starting January 2024, so if you have a position available or just want to say hi, feel free to email me.

Email / GitHub / Google Scholar / LinkedIn / Resume


Experience


Research

I'm interested in problems involving computer vision, machine learning and robotics with real-world applications. My research vision is to enable embodied agents to perceive our dynamic world and make intelligent decisions.


Towards Automating Retinoscopy for Refractive Error Diagnosis

Aditya Aggarwal, Siddhartha Gairola, Nipun Kwatra, Mohit Jain

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), Volume 6, Issue 3, 2022

arxiv / project page / code / virtual presentation / bibtex

Refractive error is the most common eye disorder and is the key cause behind correctable visual impairment. It can be diagnosed using multiple methods, including subjective refraction, retinoscopy, and autorefractors. Retinoscopy is an objective refraction method that does not require any input from the patient. In this work, we automate retinoscopy by attaching a smartphone to a retinoscope and recording retinoscopic videos with the patient wearing a custom pair of paper frames. The results from our video processing pipeline and mathematical model indicate that our approach has the potential to be used as a retinoscopy-based refractive error screening tool in real-world medical settings.


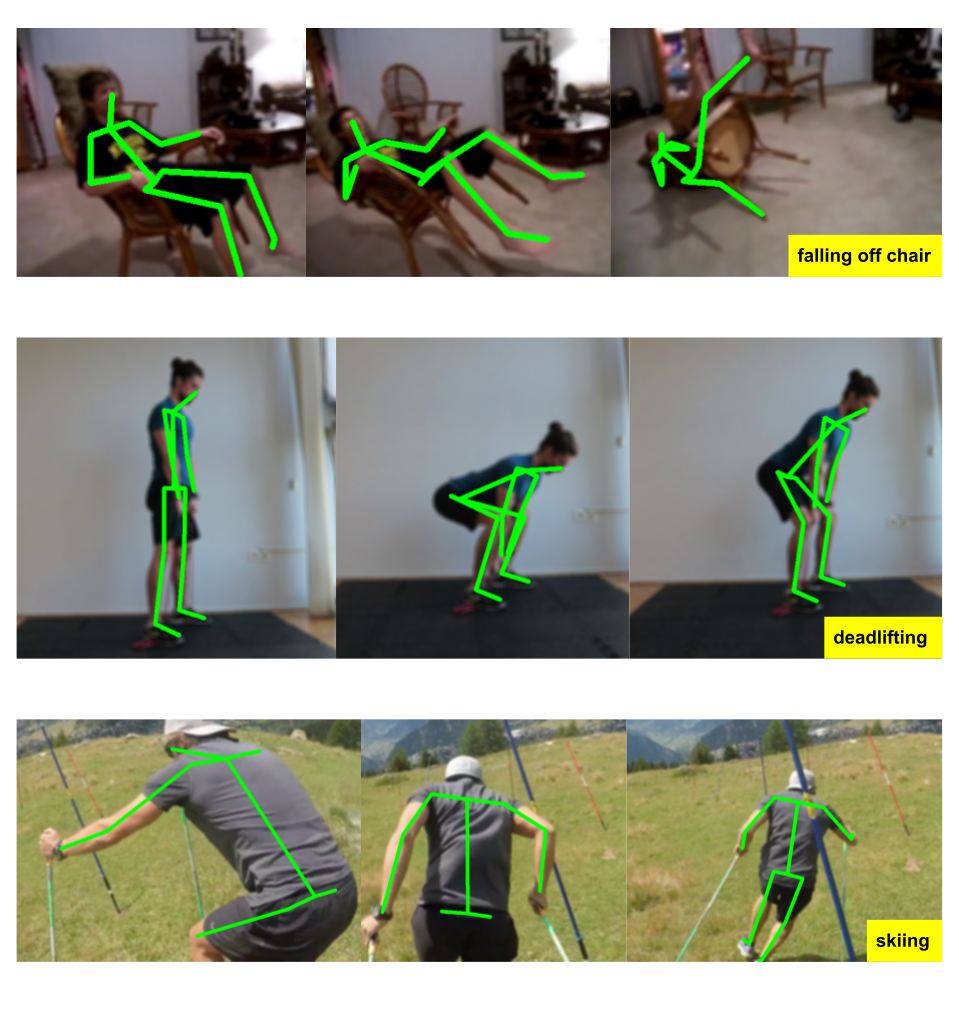

Quo Vadis, Skeleton Action Recognition?

Pranay Gupta, Anirudh Thatipelli, Aditya Aggarwal, Shubh Maheshwari, Neel Trivedi, Sourav Das, Ravi Kiran Sarvadevabhatla

International Journal of Computer Vision (IJCV), Special Issue on Human pose, Motion, Activities and Shape in 3D, 2021

arxiv / project page / code / video / bibtex

In this work, we study current and upcoming frontiers across the landscape of skeleton-based human action recognition. We benchmark state-of-the-art models on the NTU-120 dataset and provide a multi-layered assessment. To examine skeleton action recognition 'in the wild', we introduce the Skeletics-152 and Skeleton-Mimetics datasets. Our results reveal the challenges and domain gap induced by 'in the wild' action videos.


Reconstruct, Rasterize and Backprop: Dense shape and pose estimation from a single image

Aniket Pokale*, Aditya Aggarwal*, KM Jatavallabhula, K Madhava Krishna

CVPR Workshop on Long Term Visual Localization, Visual Odometry, and Geometric and Learning-Based SLAM, 2020

arxiv / project page / code / virtual presentation / bibtex

In this work, we present a new system to obtain dense object reconstructions along with 6-DoF poses from a single image. We demonstrate that our approach, dubbed reconstruct, rasterize and backprop (RRB), achieves significantly lower pose estimation errors compared to prior art, and is able to recover dense object shapes and poses from imagery. We further extend our results to an (offline) setup, where we demonstrate a dense monocular object-centric egomotion estimation system.

* Both authors contributed equally towards this work.


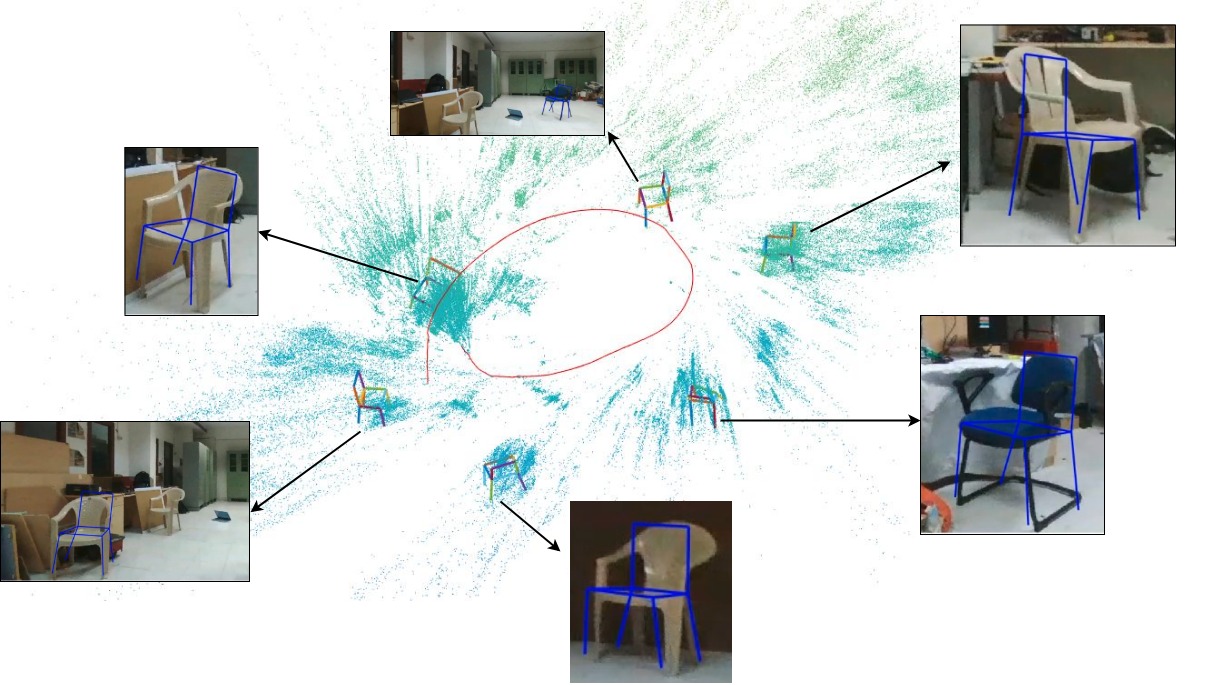

A principled formulation of integrating objects in Monocular SLAM

Aniket Pokale, Dipanjan Das, Aditya Aggarwal, Brojeshwar Bhowmick, K Madhava Krishna

Advances in Robotics (AIR), 2019

ACM Proceedings / video / bibtex

In this paper, we present a novel edge-based SLAM framework, along with category-level models, to localize objects in the scene as well as improve the camera trajectory. We integrate object category models in the core SLAM back-end to jointly optimize for the camera trajectory and the object poses, along with their shape and 3D structure. We show that our joint optimization is able to recover a better camera trajectory than Edge SLAM.

Open Source Contributions


Education


Projects
